Welcome to the first blog post from Generative Security. Before we begin, I want to make sure you know what you're going to get when you read this blog. Here, we will talk about what we see going on in the world of generative AI security and, most importantly, how it affects you. We may talk about new ideas, how old methods apply to this new world, and what we are seeing in the market. It's also important to mention what you won't see. While we of course love talking about our products and services, you won't see marketing material in this blog. We'll talk about what we're building and why, but always in the larger context of what's going on today. If that sounds interesting, read on. And feel free to sign up to get updates as we post new content. Now, without further ado...

If you ask 10 people what "generative AI security" means, you'll probably get 7 or 8 different answers, plus 1 or 2 "I don't know"s thrown in as well. So it makes sense to start by putting together a common language we can all use. That way we are talking with each other, not at each other.

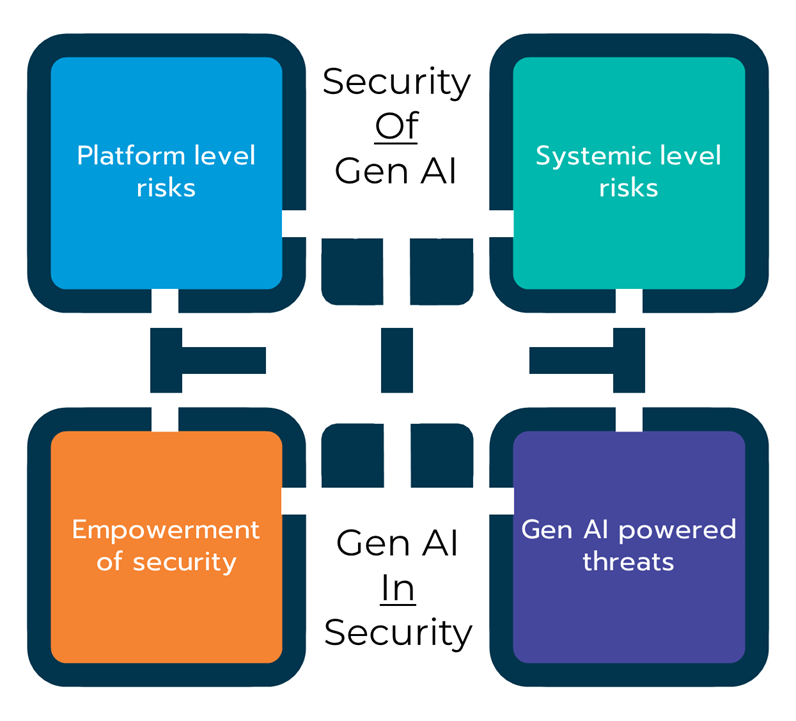

Let's break down the different intersections between security and generative AI. First, when speaking about security in and around generative AI, you have to establish whether you're talking about the security of generative AI implementations or about generative AI in security tools. I've seen a lot of cases where one person is talking about the first while the other is talking about the second. It's never pretty.

Once you've defined which of these you mean, there's another split to consider. Under the umbrella of "security of generative AI", you have two considerations: the risks of each individual platform, and the aggregate risk at the enterprise level. Think of the risks each implementation might carry, such as what data it has access to, versus the systemic risk of aggregating all the custom builds and third-party implementations together. This underscores the difference between risks owned by developer and infrastructure security teams, and governance-owned risks that involve the lines of business, finance, and legal.

For "generative AI in security", most organizations are focused on specific use cases around empowerment. Empowering or automating SOC analysts, building better internal detection mechanisms into your third-party tooling, and remediating security issues faster are consistent areas of interest. Additionally, some organizations are concerned about how generative AI is empowering adversaries' tools, such as deepfake technology and more robust malware development. For most organizations, however, there's still time before this becomes a major concern. Of course, each of these areas can be broken down further, but at a high level this will get you 90% of the way to consensus.

Why is it so important to have this common language, though? Without it, we've seen email threads drag on for weeks before anyone discovered that people weren't even talking about the same thing. The result was long delays and wasted money, not to mention unnecessary conflict between teams. Agreeing on the language up front makes it that much easier to agree on the problem you are trying to solve.

So for you, and your organization, which of these 4 blocks is the most important?

About the author

Michael Wasielewski is the founder and lead of Generative Security. With 20+ years of experience in networking, security, cloud, and enterprise architecture, Michael brings a unique perspective to new technologies. Having worked on generative AI security for the past 3 years, Michael connects the dots between the organizational, technical, and business impacts of generative AI security. Michael looks forward to spending more time golfing, swimming in the ocean, and skydiving... someday.