The world of generative AI security is evolving right before our eyes. It can be hard enough to keep up with what's directly in front of us: the marketing, the new companies, the LinkedIn spiels (mine included). Even so, I find it's critical to look around corners and see what's happening in the academic world and with the boots on the ground (so to speak). That's because the challenge isn’t just theoretical anymore. To get security past the door, we need to be aware of our impact on performance, modularity, and the practical tools that allow us to keep pace with innovation without becoming a bottleneck. So this week we're going to look at two of the many recent developments that I think will have lasting impact.

One of the more interesting recent academic papers is PromptArmor: Simple yet Effective Prompt Injection Defenses, which details a defense framework against prompt injection. There are two core ideas I want to highlight. First, the authors argue that current defenses "remain limited in one or more of the following aspects: utility degradation, limited generalizability, high computational overhead, and dependence on human intervention." We've been calling this out as one of the major issues with today's SASE-based proxy model for real-time security since May of 2025, in our blog Accelerating generative AI security with lessons from Containers. Second, they propose leveraging a separate, off-the-shelf "guardrail LLM" to detect and remove malicious prompts before they ever reach your backend agent, and they show that off-the-shelf models can be highly effective in this role.

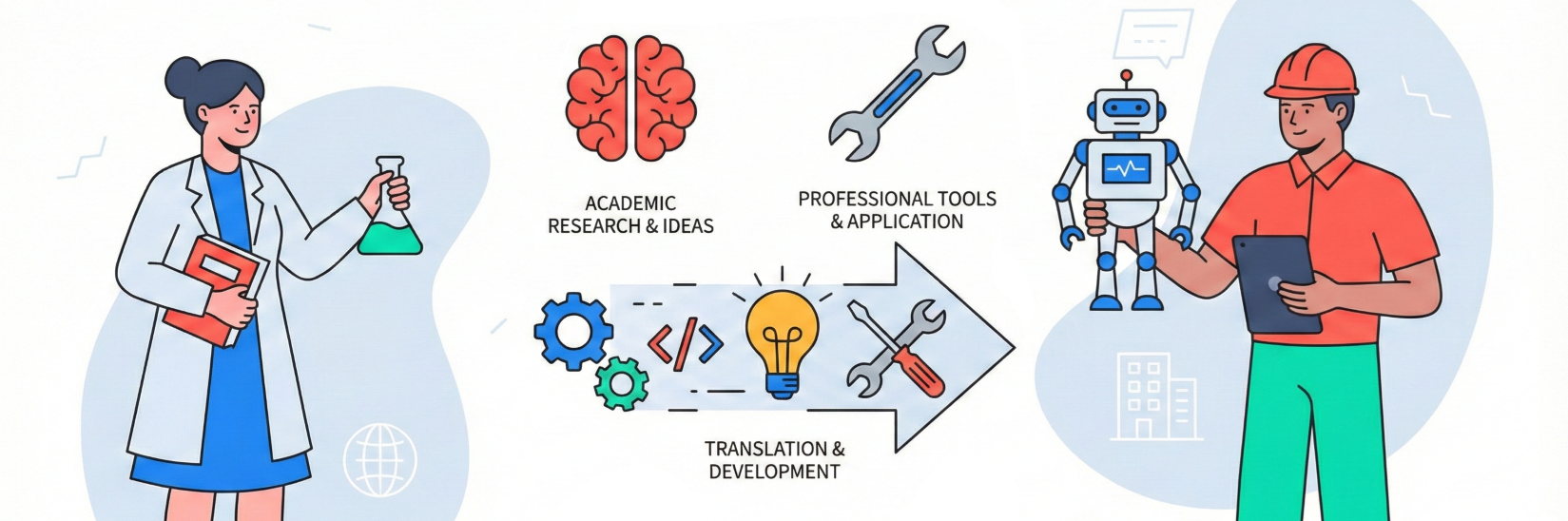

This approach is the spiritual successor to the sidecar architecture we discussed in our container security blog. Just as tools like Falco emerged to monitor container syscalls in real time - offloading the heavy lifting from the primary application - PromptArmor functions as a standalone preprocessing layer. It treats the incoming data as untrusted by default, matching the "Assume Breach" mentality we’ve championed since we brought Zero Trust Security into the Agentic AI equation.
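To make the preprocessing idea concrete, here is a minimal Python sketch of a guardrail layer sitting in front of a backend agent. It is not the paper's implementation: `GuardrailVerdict`, `toy_guardrail`, `sanitize`, and `run_agent` are all hypothetical names, and the keyword-matching guardrail is only a stand-in for what would really be a call to an off-the-shelf LLM.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class GuardrailVerdict:
    is_injection: bool
    injected_text: Optional[str] = None  # span the guardrail believes is malicious


# A guardrail is any callable that inspects untrusted text and returns a verdict.
# In a real deployment this would wrap an LLM call; that part is assumed here.
Guardrail = Callable[[str], GuardrailVerdict]


def toy_guardrail(untrusted: str) -> GuardrailVerdict:
    """Hypothetical stand-in: flags one well-known injection phrase."""
    marker = "ignore previous instructions"
    start = untrusted.lower().find(marker)
    if start == -1:
        return GuardrailVerdict(is_injection=False)
    # Treat everything from the marker to the end of that sentence as injected.
    end = untrusted.find(".", start)
    end = len(untrusted) if end == -1 else end + 1
    return GuardrailVerdict(is_injection=True, injected_text=untrusted[start:end])


def sanitize(untrusted: str, guardrail: Guardrail) -> str:
    """Remove whatever the guardrail flags; pass clean data through untouched."""
    verdict = guardrail(untrusted)
    if not verdict.is_injection or not verdict.injected_text:
        return untrusted
    cleaned = untrusted.replace(verdict.injected_text, "")
    # Collapse the whitespace left behind by the removal (this also flattens newlines).
    return " ".join(cleaned.split())


def run_agent(task: str, tool_output: str, guardrail: Guardrail,
              backend: Callable[[str], str]) -> str:
    """The backend agent only ever sees sanitized data."""
    clean = sanitize(tool_output, guardrail)
    return backend(f"{task}\n\nData:\n{clean}")
```

Because the guardrail is just a callable, you can swap the toy detector for a real model without touching the backend agent, which is the modularity argument in a nutshell.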

But why does this difference matter for enterprises today? Performance and cost. In the past, security often meant retraining models, with all the cost and delay that entails, or layering on expensive, latency-heavy guardrails that "lobotomized" the model's utility. PromptArmor flips the script. Using smaller, highly efficient models like o4-mini as the guardrail, the paper reports false positive and false negative rates both below 1% on the rigorous AgentDojo benchmark. This means security teams can maintain a high-velocity environment where the backend LLM is free to be creative and effective, while the "sidecar" security agent handles the dirty work of sanitizing inputs.
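If you want to hold your own guardrail to the same standard, the two numbers are simple to compute once you have labeled test cases. The sketch below assumes each result is a pair of (was this really an attack, did the guardrail flag it). The function name and the sample counts are made up for illustration and are not taken from the paper.

```python
from typing import Iterable, Tuple


def error_rates(results: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate).

    Each result is (is_attack, flagged):
      - false positive: benign input that was flagged
      - false negative: attack that was not flagged
    """
    false_pos = false_neg = benign = attacks = 0
    for is_attack, flagged in results:
        if is_attack:
            attacks += 1
            false_neg += not flagged
        else:
            benign += 1
            false_pos += flagged
    fpr = false_pos / benign if benign else 0.0
    fnr = false_neg / attacks if attacks else 0.0
    return fpr, fnr


# Illustrative data only: 200 benign inputs (1 wrongly flagged)
# and 100 attacks (1 missed).
sample = (
    [(False, False)] * 199 + [(False, True)]
    + [(True, True)] * 99 + [(True, False)]
)
fpr, fnr = error_rates(sample)
print(f"FPR={fpr:.1%}  FNR={fnr:.1%}")
```

Tracking both rates matters: a guardrail that flags everything has a perfect false negative rate and is useless in production.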

Crucially, the PromptArmor architecture is intended to be modular and easy to deploy. It doesn't require modifying your existing agents. I think there's work to be done to have the guardrail sit next to, instead of in front of, the agent, but we're actually working on that inside of AWS at the moment (stay tuned for more) 😉. This is the future of AI security: a network of specialized agents - one for logic, one for security - all working in parallel to ensure that a malicious instruction hidden in a transaction history or an email doesn't hijack the entire system.

While research like PromptArmor gives us the "how," the upcoming [un]prompted conference in March is where the industry is defining the "what next." Looking at the agenda for the San Francisco event, a clear theme emerges: security is moving toward rigorous, automated testing.

We are seeing a shift from "vibes-based" security to empirical evaluation. Sessions like Meta’s talk on Measuring Agent Effectiveness to Improve It and Google’s focus on Automating Defense signal that the era of hoping our system prompts are "good enough" is over.

At Generative Security, we’ve always argued that traditional AppSec tools - designed for predictable code - fail when faced with the non-deterministic nature of LLMs. The [un]prompted agenda reflects this, with talks on AI-Native Blueprints for Defensive Security (Adobe) and Establishing AI Governance Without Stifling Innovation (Snowflake). The practitioners on the ground are no longer asking if they should use AI; they are asking how to build the "evals" that prove their defenses work in production.

This shift toward testing is essential because it allows us to "jump the S-curve." Instead of waiting for a breach to teach us the lesson, we are proactively red-teaming our own agents, as seen in Block’s Operation Pale Fire session.

However, even as we get better at technical defenses like prompt injection removal, there is a looming concept that isn't being talked about enough: Social Engineering Chatbots.

Many people in the security space assume that "Social Engineering" in AI is just another term for prompt injection or jailbreaking. It isn't. To understand the difference, we have to look at what is being targeted:

A Social Engineering chatbot isn't necessarily trying to "hack" the LLM it runs on. Instead, it is a bot specifically designed - often by malicious actors - to manipulate human psychology at scale. This could be a bot that "vibe-codes" its way into a user's trust to extract MFA codes, or a conversational agent that mimics a CEO's conversational style so perfectly that it bypasses the "uncanny valley" that usually alerts employees to a scam.

We need to start distinguishing between attacking the AI (Injection/Jailbreak) and AI attacking the human (Social Engineering). Defenses like PromptArmor are fantastic for the former, but the latter requires an additional set of threat models and abuse cases. We can still use the same architecture to check for historic and real-time social engineering attacks, but we need a specific library of abuse cases, often tailored by industry, to check against. As we move into an agentic future where agents talk to agents, this also lets us reduce the risk of agents socially engineering each other. Talk about the next great frontier!
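As a rough illustration of what an industry-specific library could look like, here is a small Python sketch. Everything in it is an assumption for the sake of the example: the rule names, the regular expressions, and the `ABUSE_LIBRARY` layout are hypothetical, and a production check would more likely hand these abuse cases to a guardrail model than rely on regex alone.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical abuse-case library: shared rules plus per-industry additions.
ABUSE_LIBRARY: Dict[str, List[Tuple[str, re.Pattern]]] = {
    "common": [
        ("mfa_code_request",
         re.compile(r"\b(mfa|one[- ]time|verification)\s+(code|passcode)\b", re.I)),
    ],
    "finance": [
        ("urgent_wire_transfer",
         re.compile(r"\burgent(ly)?\b.*\bwire\b", re.I)),
    ],
}


def flag_social_engineering(message: str, industry: str) -> List[str]:
    """Return the names of every rule the message trips for this industry."""
    rules = ABUSE_LIBRARY["common"] + ABUSE_LIBRARY.get(industry, [])
    return [name for name, pattern in rules if pattern.search(message)]


print(flag_social_engineering(
    "Hi, this is IT. Please read me the MFA code you just received.", "finance"))
print(flag_social_engineering(
    "We urgently need you to wire the funds today.", "finance"))
```

The same check can run on outbound messages from your own agents and on inbound messages from other agents, which is where the agent-to-agent case comes in.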

Keeping up with this breakneck pace of change is a full-time job. If you’re looking for a consistent, expert voice that cuts through the noise of AI security, we highly recommend following Chris Hughes and his work at Resilient Cyber. I often follow his perspective, and find it a great way to stay informed on the generative AI cybersecurity landscape.

The path forward is clear: we need to embrace modular, high-performance security sidecars like the ones described in the PromptArmor research, augmented to handle multi-session and social engineering attacks. We need to commit to the rigorous testing frameworks being showcased at [un]prompted. And we must widen our lens to see the emerging threats of social engineering that go beyond simple text injections. By leaning into these updates and looking toward practitioners like Chris Hughes, we can turn AI security from a "risk to be managed" into a "competitive advantage to be wielded."

If you want to dive deeper into sidecar security models, join our conversations around Zero Trust and generative AI security, or connect with us about helping you achieve this transformation, please reach out to questions@generativesecurity.ai.

About the author

Michael Wasielewski is the founder and lead of Generative Security. With 20+ years of experience in networking, security, cloud, and enterprise architecture, Michael brings a unique perspective to new technologies. Working on generative AI security for the past 3 years, Michael connects the dots between the organizational, the technical, and the business impacts of generative AI security. Michael looks forward to spending more time golfing, swimming in the ocean, and skydiving... someday.