If you believe the hype, the evolution towards agentic architectures promises a revolution in generative AI’s ability to deliver on complex activities. In our lessons from container security blog, we introduced what an agentic, tool-based architecture might look like. In our blog about Trust in the age of agentic tools, we touched on how agentic architectures affect security, specifically that these agents are likely to come from different suppliers, with different maturities and different access rights. Over the next few weeks, I want to combine these two concepts into a single thread and dive deep into how the future of AI security needs to be steeped in Zero Trust principles.

To start, I want to offer a primer on Zero Trust that a good colleague of mine, Lee Newcombe, put together years ago: What Does Zero Trust Mean to Cyber Risk. The important thing is to acknowledge a few key tenets of Zero Trust: “Assume Breach” in a component-based design, context-based decision making, and continuous enforcement of authentication and authorization. To make these real, let’s look at a recent security issue that impacted almost everyone: Log4Shell. Log4Shell underscored the need for robust defenses built in throughout your environment, not just at the edge.

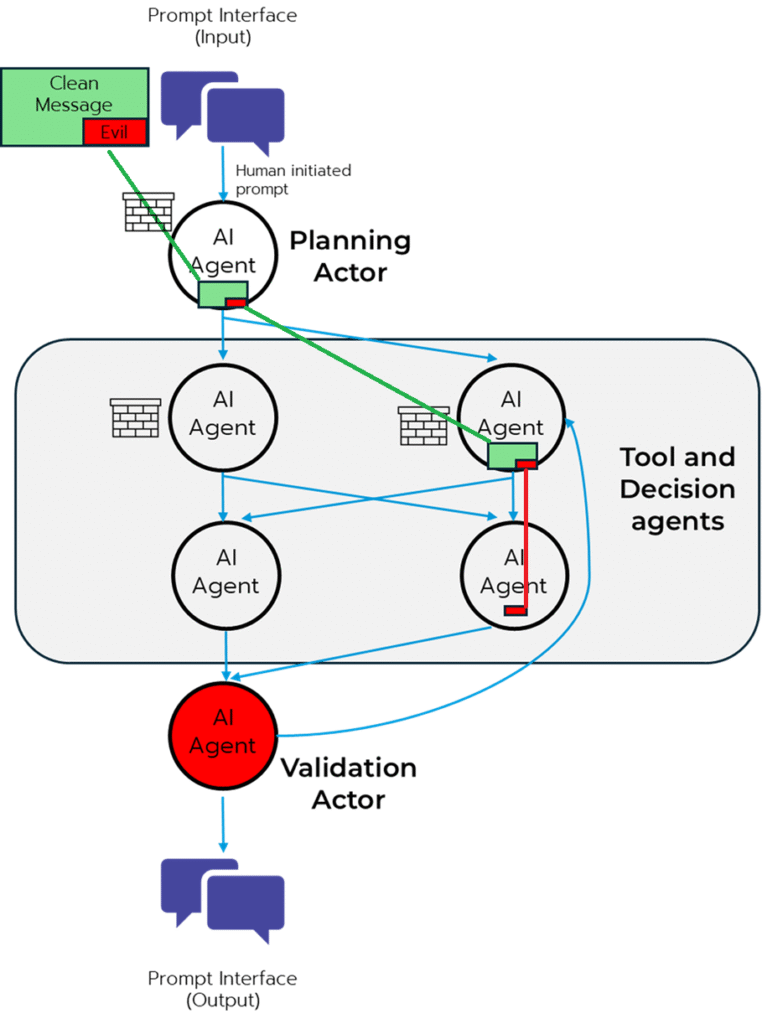

Log4Shell highlighted how a single vulnerability buried far from traditional user interactions could cause widespread issues. Agentic AI carries a similar and significant risk, because agents integrate with various tools and execute well beyond the traditional user interface. An agent designed for a specific purpose might, inadvertently or through subtle manipulation, pass a malicious set of instructions downstream to other agents that lack the necessary guardrails, causing them to fetch compromised data or execute an unverified command. The intricate web of interactions within an agentic ecosystem means that a vulnerability in one component could quickly propagate.
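To make “don’t trust the upstream agent” a bit more concrete, here is a minimal Python sketch of a verification gate that a downstream tool could run on every incoming request. It assumes the caller’s identity has already been authenticated by some other mechanism (not shown). The policy table, validators, agent names, and tool names are all hypothetical, chosen only for illustration. Each request is checked on its own merits and denied by default, rather than trusted because it came from another agent inside the system.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class AgentRequest:
    caller_id: str                             # upstream agent identity (assumed already authenticated)
    tool: str                                  # tool the caller wants to invoke
    args: dict = field(default_factory=dict)   # arguments the caller supplied


# Hypothetical policy: which callers may invoke which tools. Anything not listed is denied.
POLICY: dict[str, set[str]] = {
    "research-agent": {"search_docs"},
    "ops-agent": {"search_docs", "restart_service"},
}

# Hypothetical per-tool argument checks. A tool with no validator is always rejected.
VALIDATORS: dict[str, Callable[[dict], bool]] = {
    "search_docs": lambda a: isinstance(a.get("query"), str) and len(a["query"]) < 500,
    "restart_service": lambda a: a.get("name") in {"web", "worker"},
}


class Denied(Exception):
    """Raised when a request fails verification."""


def authorize(req: AgentRequest) -> None:
    """Verify every request independently; never inherit trust from the caller's position."""
    allowed = POLICY.get(req.caller_id, set())
    if req.tool not in allowed:
        raise Denied(f"{req.caller_id} may not call {req.tool}")
    validator = VALIDATORS.get(req.tool)
    if validator is None or not validator(req.args):
        raise Denied(f"arguments rejected for {req.tool}")


if __name__ == "__main__":
    # Permitted: ops-agent may restart an allowlisted service.
    authorize(AgentRequest("ops-agent", "restart_service", {"name": "web"}))
    # Denied: a (possibly manipulated) research agent tries to reach an ops tool.
    try:
        authorize(AgentRequest("research-agent", "restart_service", {"name": "web"}))
    except Denied as err:
        print(err)  # research-agent may not call restart_service
```

The design choice worth noting is default deny: an unknown caller, an unlisted tool, or a tool with no validator is rejected, so an upstream agent that has been manipulated cannot widen its reach simply by asking.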

But this is not all doom and gloom; it presents a unique opportunity to embed security into the very fabric of these new architectures. By adopting Zero Trust principles, we can proactively design resilient systems.

Never Trust, Always Verify

Zero Trust fundamentally shifts our security paradigm from implicit trust within perimeters to a "never trust, always verify" approach. For agentic AI, this translates into a few critical practices:

Now, let’s be clear: this introduction merely scratches the surface. Over the next few weeks, we'll publish a multi-part series that explores these tenets and delves deeper into the practical application of Zero Trust to agentic AI workflows. As you may have noticed from our other blogs, we’re not going to talk about JUST the technology, but also about the impact on how organizations should approach agentic AI. I’m hoping to mix in a guest author or two as well, so keep watching.

The future of AI is undeniably agentic, and its secure development hinges on our commitment to Zero Trust. If you want to get a head start on the conversations around Zero Trust and generative AI security (agentic or not), or connect with us about helping you achieve this transformation, please reach out to questions@generativesecurity.ai.

About the author

Michael Wasielewski is the founder and lead of Generative Security. With 20+ years of experience in networking, security, cloud, and enterprise architecture, Michael brings a unique perspective to new technologies. Having worked on generative AI security for the past 3 years, Michael connects the dots between the organizational, technical, and business impacts of generative AI security. Michael looks forward to spending more time golfing, swimming in the ocean, and skydiving... someday.