A few weeks ago we talked about the top 3 lessons generative AI security can learn from the cloud. And just as generative AI is having its cloud moment, there’s still more we can learn from the evolution of security in other technologies. If you look at the lessons from cloud, a lot of the problems weren’t just technical, but had a very human element to them, or at least to their solutions. If we want to get more granular in the technology, one of the most instructive parallels comes from the adoption of containers. The journey from trying to secure early Docker deployments to today’s Kubernetes-native security stacks offers some clear lessons on how we can continue to “jump the S-curve” with generative AI security.

1. Standard tools might work for a while, but they aren’t fit for purpose

Sometimes I see people who love their tool and want it to fit everywhere. II can’t help but remember the poor woman in this video as one solution fits all: https://www.tiktok.com/@tired_actor/video/6912855387788102918. But one of the hardest lessons from container security was the realization that traditional security tools weren’t able to handle the novel constraints and security needs of containerized environments. Attempting to force-fit endpoint detection and antivirus designed for resource heavy applications onto lightweight containers often led to performance bottlenecks, incomplete visibility, and ultimately a false sense of security. More than just agents, things like identity, patching, and logging all had to be redesigned to meet the inherent nature of containers.

Let’s look at the traditional host-based intrusion detection systems (HIDS) for parallels. These systems, designed to monitor file system changes and process execution on persistent servers, struggled with the rapid churn and lower resources of containers. Heavy HIDS agents attempting to log every ephemeral container's lifecycle and internal processes quickly became ineffective, and very expensive. Eventually, tools like Falco by Sysdig emerged. Tools like this were purpose-built to monitor container syscalls in real time and send the reporting to appropriate datalakes, offloading logging, analysis, and proposed remediation. Signals could still be sent to and by the tools to act in real time, but now with far less overhead.

Similarly, we cannot expect traditional application security tools that are designed for consistent and predictable inputs and outputs to fully secure generative AI. Security needs to get better at both black box and white box style security testing to address the unique attack surfaces of large language models (LLMs). This means is not just putting prompt firewalls (sorry to all my network friends) on the perimeter of our LLM, nor trusting static guardrails (sorry to my more application-minded security friends) inside our LLM to protect our data. Security evaluation must occur at the input, output, and also intermediate steps throughout modern agentic processes. If this sounds a bit like we’re talking about Zero Trust, good, because while we’re not there yet, we should start thinking about this now. Additionally, repeated and continuous testing of our models, our pipeline, and our training data are essential to protecting stochastic systems. Later on, we might see security become more statistic-based instead of proof-based, but I wouldn’t expect that to happen anytime soon given compliance and regulatory requirements.

2. Non-deterministic is the new ephemeral

The ephemeral nature of containers fundamentally changed how we thought about patching and vulnerability management. The traditional model of "patching in place" on long-lived servers became irrelevant for containers that were spun up and down in seconds. This forced security teams to shift left, baking security into the CI/CD pipeline rather than relying on runtime patching. Standard reporting practices of vulnerabilities per server month over month were quickly found to be inadequate. Instead, vulnerability scanning, dependency analysis, and configuration hardening were integrated into the CI/CD pipeline, ensuring that every new container deployed was built from a secure, preferably immutable foundation.

Understanding that generative AI applications are, by their very nature, non-deterministic means we must change the security model again. Today, common processes like executing security test once (if we’re lucky) inside the application deployment pipeline, doing dependency checks manually before going into production, patching static rules in place, and having a secure edge all fall apart for different reasons with generative AI. Dynamic and Static security tests may have different outcomes if run multiple times, dependencies inside our generative AI models are likely to change frequently for the next few years, patching guardrails inside of LLM’s frequently will start to incur excessive costs and resources leading to pushback, and evaluation at the edge will not be able to predict behavior behind it. This doesn’t mean we throw away all of these tools, but we must adapt them or replace them to meet today’s needs.

One approach is to adopt a "golden model" approach. This involves creating immutable, versioned, and thoroughly tested generative AI models that have undergone rigorous adversarial testing, bias detection, and safety evaluations before deployment. This includes robust model governance, versioning, and the ability to roll back to previous, known-good model states, similar to how we manage container image registries. Looking into the agentic future, we then supplement these protection mechanisms with real-time, embedded security agents inside our agentic workflows. <sales> At Generative Security we’re actively building for this future, building security agents that can be integrated into agentic frameworks like LangGraph to execute security evaluation at each step of your logic in parallel to your processing, saving cost and time while maintaining high reliability and resiliency. </sales> Monitoring the results of all these test are, of course, performed with generative AI to give your security teams the information they need quickly to address real-time and fresh threats inside your environments.

3. The next evolution of security architecture (again)

All of this leads to the, once again, discovering a new architecture for security. Looking over these past lessons, we see an evolution from host-based agents that became too expensive either computationally or monetarily, to firewalls and proxies that worked until they became risks to resiliency or too expensive/burdensome in distributed environments, to today’s modern sidecar architecture, with security existing as a separate process or container with its own resources but control in the environment. So what would this look like for generative AI?

Today, we have static guardrails that are embedded into LLM training processes, and security tooling and logic embedded into the prompt response logic. However, while at Google Next I overheard a data scientist day “The more guardrails you put into an LLM, the more useless it becomes.” This is a harbinger of the pushback security can expect to see. Not to mention that the training of guardrails, and every time an update occurs the retraining will become prohibitively expensive. Eventually, we will get kicked out of the generative AI models themselves, or at least have a reduced ability to inject ourselves.

Next, we already see companies implementing real-time prompt proxies and firewalls to protect the input and output between the end-user and models themselves. This works for now, but it has its limitations. It improves upon the ability to update protection against new threats without costing an arm and a leg to retrain, but it can treat the LLM or other generative AI model as a black box. Should attacks like the very basic rotate character attack get past the firewall, the model is defenseless. As we also move into agentic frameworks, with agents talking to agents, the architectural burden and (licensing) cost of putting these proxies between each agent becomes unacceptable. So what does a sidecar model look like for agentic frameworks?

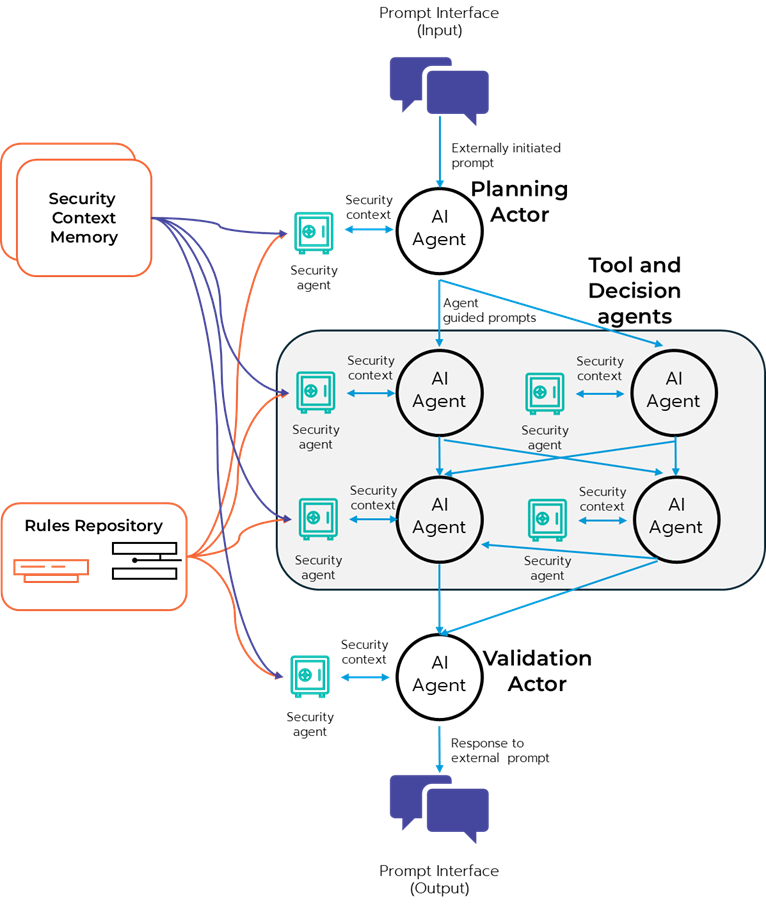

First, let's use a model for what an agentic workflow might look like. NVIDIA has a great example on their technical blog titled Build an Agentic Video Workflow with Video Search and Summarization. Picture a set of agents acting in different capacities, or as different tools, supporting a larger activity. Communication between them can be over the Model Context Protocol (MCP) and/or Agent2Agent (A2A) protocols with a network of prompts begetting prompts, and an eventual outcome. For a sidecar model, each agent would have an attached security agent with a separate amount of CPU/GPU, memory, etc… that would run its own low cost LLM and evaluate the prompts for security considerations. There could be a central repository of rules each security agent could access, shared “memory” in a cache to maintain context, and communication that empowers the security agent to stop further processing if a problem is identified.

In this framework, security agents can run on cheaper hardware with purpose built, less expensive generative models and can maintain the system’s resilience by choosing between a fail-open or fail-closed approach if the security agent doesn’t respond in time or stops working. This very much mirrors the attributes of a sidecar for modern containers.

The history of container security provides a valuable roadmap for identifying a potential future to address the security challenges of generative AI. By learning from these past lessons, embracing tools fit for purpose, adopting security approaches for non-deterministic workloads, and moving towards embedded security within AI systems, we can build a more secure and resilient future for generative AI. We are still early days, but these insights give us ability to “jump the S-curve” in building the secure foundations for the future. If you want to discuss this more or connect with us about helping you achieve this transformation, please reach out to questions@generativesecurity.ai.

About the author

Michael Wasielewski is the founder and lead of Generative Security. With 20+ years of experience in networking, security, cloud, and enterprise architecture Michael brings a unique perspective to new technologies. Working on generative AI security for the past 3 years, Michael connects the dots between the organizational, the technical, and the business impacts of generative AI security. Michael looks forward to spending more time golfing, swimming in the ocean, and skydiving... someday.