In one of our first blog posts, we introduced the concept of four intersections between generative AI and security: Platform-level risks and Systemic-level risks as part of the Security of Gen AI; and the Empowerment of security and Gen AI-powered threats as part of Gen AI in Security. Over the next few blogs, we will dive into each of these topics to better explain what they mean for you and how you should consider them as part of your enterprise and IT risk management. This isn’t meant to be an exhaustive dive into the nitty-gritty details, just a way to get you thinking about these topics individually. First up – Security of Gen AI: Platform-level risks!

When we talk about generative AI, it's crucial to recognize that it operates within a broader ecosystem. Generative AI needs to be secured both as a standalone entity and as an integral part of a larger system. Consider, for example, a Google Cloud architecture for a Vertex AI-powered chatbot connected to BigQuery. Even without a front end to secure, you still have APIs, databases, models, and search functionality that need adequate security controls around them. Then, layer on the model security, data security, and prompt security necessary for the LLM to operate safely. This dual perspective ensures that we address the unique security challenges posed by generative AI at every level.
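To make the layering idea a little more concrete, here is a minimal Python sketch of one request passing through three controls: an identity check at the system level, an input screen at the prompt level, and output redaction at the data level. Everything in it is an assumption for illustration: the `Caller` type, the role allow-list, the regex patterns, and `fake_model` (a stand-in for a real model call) are hypothetical and not part of any Google Cloud or Vertex AI API. Simple pattern matching like this would not stop a determined attacker; the point is only to show where each layer sits.

```python
import re
from dataclasses import dataclass

# Hypothetical allow-list and patterns, for illustration only.
ALLOWED_ROLES = {"analyst", "support"}
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Caller:
    user_id: str
    role: str


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; returns a canned answer."""
    return f"Answer for '{prompt}'. Contact: jane.doe@example.com"


def guarded_chat(caller: Caller, prompt: str, model=fake_model) -> str:
    # System-level control: only known roles may reach the model at all.
    if caller.role not in ALLOWED_ROLES:
        raise PermissionError(f"role {caller.role!r} may not call the model")

    # Prompt-level control: reject inputs matching known injection phrasing.
    if any(p.search(prompt) for p in INJECTION_PATTERNS):
        raise ValueError("prompt rejected by input screen")

    # Data-level control: redact email addresses before returning output.
    return EMAIL_PATTERN.sub("[REDACTED EMAIL]", model(prompt))
```

Calling `guarded_chat(Caller("u1", "analyst"), "What were Q3 sales?")` returns the canned answer with the email address redacted, while an unknown role or an injection-style prompt raises before the model is ever called.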

So this doesn’t turn into a 50-page whitepaper, let’s just briefly talk about the security of the system that your generative AI model supports. The foundations of identity, secure communications, data governance and security, comprehensive logging and monitoring, and automating risk discovery and remediation play an important role. Cloud providers have put together fantastic guides to walk you through the considerations. For example:

Of course, your implementation may have more (or fewer) considerations, but these are good places to start.

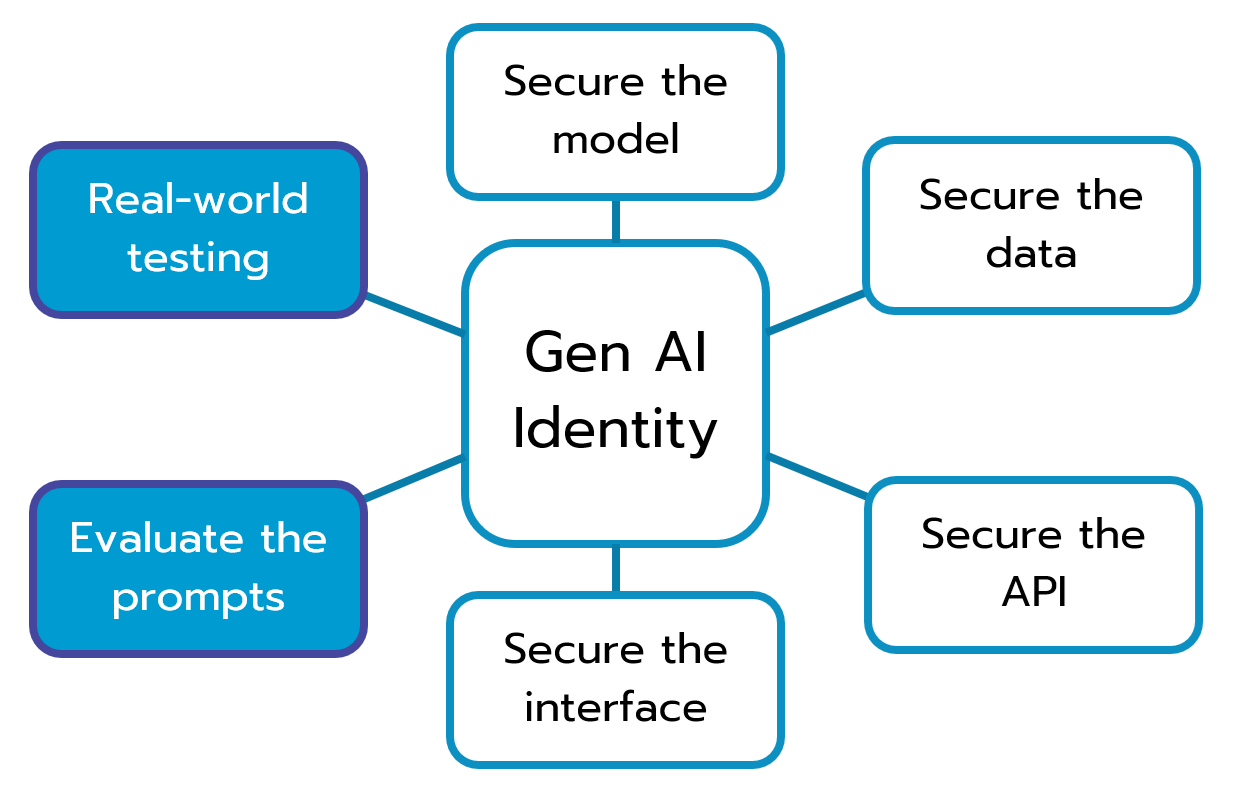

Generative models also come with their own security considerations. Just as your databases and pipelines have identity, network, and encryption security built into their design, your generative AI models must as well. Again, we’ll stay high level for now, but we see seven conceptual areas where you need to be intentional about security.

Not to be forgotten, security automation, monitoring, and remediation are also key considerations. They are not listed as their own area, though, because they are a common thread through all of them. A modern SOC may need some new capabilities to understand the security signals from these new attack paths.
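As one illustration of what a new security signal could look like, the sketch below builds a structured log record for a single model interaction using only the Python standard library. The field names (`event_type`, `decision`, `reason`, and so on) and the logger name are our own assumptions, not a standard schema; your SIEM or logging platform will have its own conventions. The sketch hashes the prompt instead of storing it, on the assumption that raw prompts may contain sensitive data.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

# Hypothetical logger name; route it to whatever sink your SOC consumes.
logger = logging.getLogger("genai.security")


def build_event(user_id: str, prompt: str, decision: str, reason: str = "") -> dict:
    """Build a structured record for one model interaction."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": "genai.prompt",  # hypothetical event name
        "user_id": user_id,
        # Hash rather than store the raw prompt, which may hold sensitive data.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "decision": decision,  # e.g. "allowed" or "blocked"
        "reason": reason,
    }


def log_event(event: dict) -> str:
    """Serialize the event as one JSON line, log it, and return the line."""
    line = json.dumps(event, sort_keys=True)
    logger.info(line)
    return line
```

Emitting one JSON object per line keeps the records easy to parse downstream, and the hash still lets an analyst spot the same prompt being repeated across users without the log itself holding the text.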

If you’re interested in diving into any of these areas more deeply, don’t hesitate to reach out to us at questions@generativesecurity.ai and we can set up a quick conversation or a deeper engagement, whichever you prefer.

The next blog will expand the funnel, so to speak, and talk about the Systemic-level risks facing organizations as they add generative AI tools to their toolbelt. Think of questions like what happens when you have three different chatbots with access to three different data stores: can someone piece that information together to learn something you didn’t intend to share? Or consider the governance and legal exposure of training regional models with global data. We’ll expand on these risks next. Hope to see you there.

About the author

Michael Wasielewski is the founder and lead of Generative Security. With 20+ years of experience in networking, security, cloud, and enterprise architecture, Michael brings a unique perspective to new technologies. Working on generative AI security for the past 3 years, Michael connects the dots between the organizational, the technical, and the business impacts of generative AI security. Michael looks forward to spending more time golfing, swimming in the ocean, and skydiving... someday.